Apache Beam - Intro!

What's better than Apache Spark?

Writing Beam pipelines that run on a Spark cluster.

Let’s dive into the Beam world to experience firsthand how fluid the design patterns are, and how well the Beam community has abstracted away the complexities to give data engineers a Unicorn, ahem, a unified programming model.

A unified programming model! That’s right: code once, run anywhere. If you have ever written parallel pipelines geared towards one specific environment, only to be told to port them to a different environment when it came to production, you know the pain (I had my share of it while working in genomics not that long ago). That kind of rewrite is every data engineer’s nemesis.

Beam addresses this problem by separating the execution engine (runners) from the pipeline code. At the time of writing, Beam supports the following runners:

- Direct Runner (for local development)

- Apache Spark

- Apache Flink

- Google Cloud Dataflow

- Apache Samza

- Apache Nemo

- Hazelcast Jet

In addition, Beam currently supports Java, Python and Go, with more languages on the way.
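To make the pipeline/runner split concrete, here is a minimal sketch of how the runner can be picked through option flags without touching the pipeline code. The helper names `runner_flags` and `run_sum_pipeline` are my own and not part of Beam; `--runner` and `--spark_master_url` are the Beam option names as I understand them, so double-check them against the SDK version you have installed.

```python
def runner_flags(runner="DirectRunner", spark_master_url=None):
    """Build Beam-style option flags for the chosen runner (hypothetical helper)."""
    flags = [f"--runner={runner}"]
    # Assumption: the Spark master URL only matters when targeting Spark.
    if runner == "SparkRunner" and spark_master_url:
        flags.append(f"--spark_master_url={spark_master_url}")
    return flags


def run_sum_pipeline(flags):
    """Run the same tiny pipeline on whichever runner the flags select."""
    # Imported here so that runner_flags can be used without Beam installed.
    import apache_beam as beam
    from apache_beam.options.pipeline_options import PipelineOptions

    with beam.Pipeline(options=PipelineOptions(flags)) as pipeline:
        (
            pipeline
            | "Create numbers" >> beam.Create([1, 2, 3, 4])
            | "Sum everything" >> beam.CombineGlobally(sum)
            | "Print results" >> beam.Map(print)
        )
```

For local development you would call `run_sum_pipeline(runner_flags())`; to target Spark, something like `run_sum_pipeline(runner_flags("SparkRunner", "spark://localhost:7077"))`, where the master URL is only a placeholder for your own cluster.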

In this post, I share what I learned while building a simple pipeline with Beam. We will also explore Interactive Beam's visual display in a Jupyter notebook.

This post assumes that you have a Spark cluster installed and Jupyter Notebook running. For more information, see my blog post here.

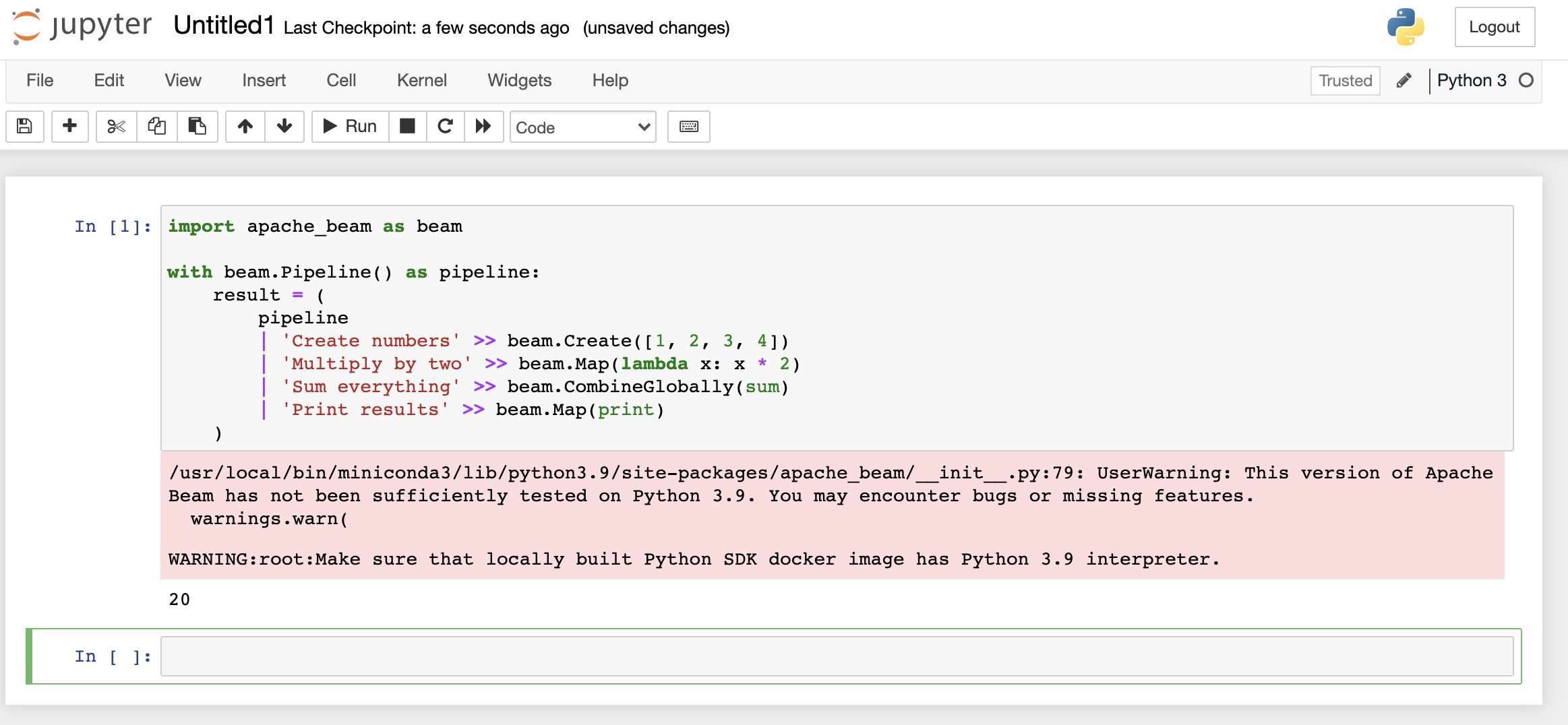

A Simple Pipeline - Sum of Numbers:

    import apache_beam as beam

    # With no runner specified, Beam falls back to the local Direct Runner.
    with beam.Pipeline() as pipeline:
        result = (
            pipeline
            | 'Create numbers' >> beam.Create([1, 2, 3, 4])
            | 'Multiply by two' >> beam.Map(lambda x: x * 2)
            | 'Sum everything' >> beam.CombineGlobally(sum)
            | 'Print results' >> beam.Map(print)  # prints 20
        )
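It can help to see what this pipeline computes in plain Python. The sketch below is not Beam code: it mimics the steps with lists, and splits the data into made-up "bundles" to show why the function handed to `CombineGlobally` should be associative and commutative, since a runner is free to combine partial results in any grouping. The function name `combine_globally` and the bundle boundaries are purely illustrative.

```python
def combine_globally(combine_fn, bundles):
    """Combine each bundle on its own, then combine the partial results."""
    partials = [combine_fn(bundle) for bundle in bundles]
    return combine_fn(partials)


numbers = [1, 2, 3, 4]               # 'Create numbers'
doubled = [x * 2 for x in numbers]   # 'Multiply by two'

# Two hypothetical ways a runner might split the same data across workers.
one_bundle = [doubled]
two_bundles = [doubled[:1], doubled[1:]]

total_one = combine_globally(sum, one_bundle)
total_two = combine_globally(sum, two_bundles)

print(total_one, total_two)  # 20 20
```

Because `sum` gives the same answer however the elements are grouped, both splits agree. A function without that property could return different results from one run to the next.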

Interactive Visual Display of a Beam Pipeline:

    import apache_beam as beam
    from apache_beam.runners.interactive import interactive_runner
    import apache_beam.runners.interactive.interactive_beam as ib
    from apache_beam.options import pipeline_options
    from datetime import timedelta

    # Capture at most 60 seconds of data for interactive inspection.
    ib.options.capture_duration = timedelta(seconds=60)

    options = pipeline_options.PipelineOptions()
    p = beam.Pipeline(interactive_runner.InteractiveRunner(), options=options)

    words = p | 'Create words' >> beam.Create(['Hello', 'there!', 'How', 'are', 'you?'])

    # Group the elements into fixed 10-second windows.
    windowed_words = (words
                      | 'window' >> beam.WindowInto(beam.window.FixedWindows(10)))

    # Count the occurrences of each word within each window.
    windowed_word_counts = (windowed_words
                            | 'count' >> beam.combiners.Count.PerElement())

    # Render the counts in the notebook, including window metadata.
    ib.show(windowed_word_counts, include_window_info=True, visualize_data=True)
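To build some intuition for what `FixedWindows(10)` followed by `Count.PerElement()` does, here is a plain-Python sketch of fixed-window assignment and a per-window count. It is not Beam's implementation: it assumes the usual rule that an element with timestamp `t` falls into the window starting at `t - (t % size)`, and the timestamps attached to the words are invented for illustration.

```python
from collections import Counter


def fixed_window(timestamp, size):
    """Return the [start, end) window of length `size` containing `timestamp`."""
    start = timestamp - (timestamp % size)
    return (start, start + size)


def count_per_window(timestamped_words, size):
    """Count each word separately within every fixed window."""
    counts = Counter()
    for word, timestamp in timestamped_words:
        counts[(fixed_window(timestamp, size), word)] += 1
    return dict(counts)


# Hypothetical event times, in seconds.
events = [("Hello", 1), ("there!", 4), ("Hello", 7), ("Hello", 12)]
window_counts = count_per_window(events, size=10)

for (window, word), n in sorted(window_counts.items()):
    print(window, word, n)
```

Here "Hello" is counted twice in the window `(0, 10)` and once in `(10, 20)`. In the notebook example, the words come from `beam.Create` with no event timestamps attached, so as far as I can tell they all end up in the same window.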

Happy Coding!!